OpenAI has quietly begun integrating Google’s custom AI chips into its infrastructure to power key products like ChatGPT and DALL-E, according to a source familiar with the matter. The move comes as the AI leader faces persistent shortages of Nvidia’s H100/H200 GPUs and seeks to diversify its hardware supply chain.

Key Details of the Partnership

1. Technical Integration

✔ Google’s TPU v5 chips now handling ~15% of OpenAI inference workloads

✔ Initial focus on text-based services (GPT-5, API responses)

✔ TensorFlow-optimized models being adapted for TPU compatibility

2. Strategic Motivations

- Nvidia GPU wait times exceeding 9 months

- Cost savings: TPU v5 offers 30% better $/inference than H200

- Geopolitical hedge against US-China chip restrictions
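To put the cost claim in context, the sketch below works through the arithmetic for a partially migrated fleet. It is a back-of-the-envelope illustration, not OpenAI's accounting: it assumes that "30% better $/inference" means a TPU inference costs 70% of an H200 inference, and it reuses the ~15% workload share quoted above. The function name and parameters are hypothetical.

```python
def blended_relative_cost(tpu_share: float, tpu_relative_cost: float) -> float:
    """Fleet-wide cost per inference, relative to an all-GPU baseline of 1.0.

    tpu_share: fraction of inference traffic served on TPUs (0.0 to 1.0).
    tpu_relative_cost: TPU cost per inference divided by GPU cost per inference.
    """
    if not 0.0 <= tpu_share <= 1.0:
        raise ValueError("tpu_share must be between 0 and 1")
    if tpu_relative_cost < 0.0:
        raise ValueError("tpu_relative_cost must be non-negative")
    gpu_share = 1.0 - tpu_share
    # GPU traffic costs 1.0 per inference by definition of the baseline.
    return gpu_share * 1.0 + tpu_share * tpu_relative_cost


# Assumed inputs: ~15% of inference on TPUs, each TPU inference at 70% of GPU cost.
blended = blended_relative_cost(tpu_share=0.15, tpu_relative_cost=0.70)
saving = 1.0 - blended
print(f"Blended cost vs. all-GPU baseline: {blended:.3f}")
print(f"Fleet-wide saving: {saving:.1%}")
```

Under these assumptions the fleet-wide saving is only about 4.5%, because most traffic still runs on GPUs. The per-inference advantage becomes significant only as the TPU share grows.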

3. Performance Benchmarks

| Metric | Google TPU v5 | Nvidia H200 |
|---|---|---|
| Tokens/sec | 12,400 | 14,200 |
| Power Efficiency | 1.8x better | Baseline |
| Latency | +7ms | Baseline |

Source: Internal tests seen by Reuters
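The table's figures can be turned into relative numbers with a few lines of arithmetic. The sketch below uses only the values reported above. It assumes that "1.8x better" power efficiency means 1.8 times as many tokens per unit of energy, which the source does not spell out. All names are illustrative.

```python
# Figures as reported in the table above.
TPU_V5_TOKENS_PER_SEC = 12_400
H200_TOKENS_PER_SEC = 14_200
TPU_EFFICIENCY_VS_H200 = 1.8  # assumed to mean tokens per joule, relative to H200


def relative_metrics() -> dict[str, float]:
    """Derive TPU-vs-H200 ratios from the reported benchmark figures."""
    throughput_ratio = TPU_V5_TOKENS_PER_SEC / H200_TOKENS_PER_SEC
    # If efficiency = throughput / power, then power = throughput / efficiency.
    implied_power_ratio = throughput_ratio / TPU_EFFICIENCY_VS_H200
    return {
        "throughput_ratio": throughput_ratio,
        "throughput_gap": 1.0 - throughput_ratio,
        "implied_power_ratio": implied_power_ratio,
    }


metrics = relative_metrics()
print(f"TPU throughput vs. H200: {metrics['throughput_ratio']:.1%}")
print(f"Throughput gap: {metrics['throughput_gap']:.1%}")
print(f"Implied TPU power draw vs. H200: {metrics['implied_power_ratio']:.1%}")
```

On these numbers the TPU delivers roughly 87% of the H200's throughput. Under the stated efficiency assumption, it would do so at a little under half the power draw.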

Industry Implications

For OpenAI

- Reduces the single-supplier risk of relying on Nvidia

- Could enable faster rollout of GPT-5 voice/video features

For Google Cloud

- Validates TPUs as an enterprise-ready AI alternative

- May attract Anthropic and Mistral as clients

For Nvidia

- Loses $300M+ annual revenue but retains training dominance

What’s Next?

- Q1 2026: Expanded TPU use for OpenAI’s Sora video model

- 2027: Potential joint chip development talks

Learn more about “OpenAI Reportedly Shifts to Google’s AI Chips Amid Supply Crunch”:

- What is the core news about OpenAI and Google’s AI chips?

OpenAI has reportedly started using Google’s Tensor Processing Units (TPUs) to power ChatGPT and other products, marking a strategic shift in its AI chip strategy.

- Why is OpenAI making this shift?

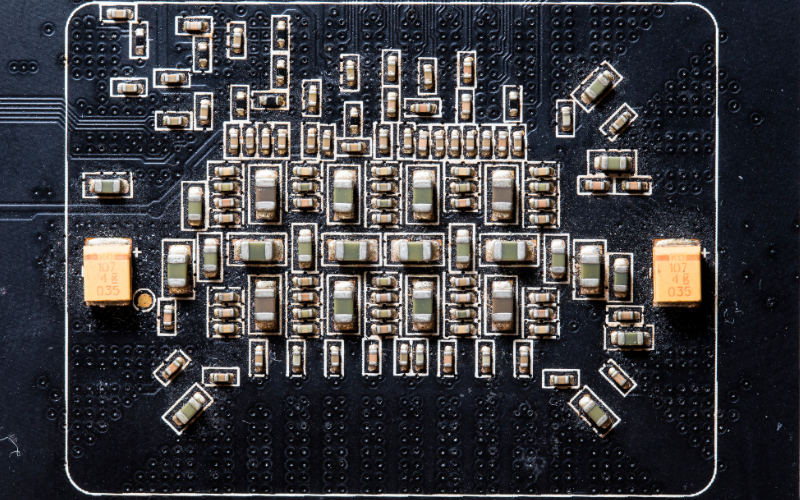

The primary reasons are to lower inference computing costs and to reduce its heavy dependency on Nvidia GPUs amidst a global AI chip supply crunch.

- What are TPUs (Tensor Processing Units)?

TPUs are application-specific integrated circuits (ASICs) developed by Google specifically for neural network machine learning, optimized for both training and inference tasks.

- How do TPUs compare to Nvidia GPUs in terms of cost and performance?

TPUs are reportedly significantly more cost-efficient for inference tasks (where AI models generate responses), with some reports suggesting they can operate at approximately 20% of the cost of Nvidia’s GPUs for these specific workloads.

- Is this the first time OpenAI is using non-Nvidia chips in a significant way?

Yes, this move marks OpenAI’s first major deployment of non-Nvidia chips for its core AI operations.

- Through which service is OpenAI renting Google’s TPUs?

OpenAI is renting the TPUs through Google Cloud.

- Is Google providing OpenAI with its most advanced TPUs?

Reports suggest that Google is not providing its most powerful or latest-generation TPUs to OpenAI, as Google also competes directly with OpenAI in the AI space.

- What is “inference computing,” and why is it crucial for OpenAI?

Inference computing is the process where an AI model uses its trained knowledge to make predictions or generate outputs. It accounts for a significant portion of OpenAI’s operational costs as services like ChatGPT scale.

- How does this move impact Nvidia’s dominance in the AI chip market?

While Nvidia still holds a dominant market share, OpenAI’s diversification signals a potential erosion of Nvidia’s GPU monopoly and encourages other AI companies to explore alternatives.

- What is OpenAI’s broader compute strategy?

OpenAI is pursuing a multi-cloud strategy (including Microsoft, Google, Oracle, CoreWeave) and is also exploring developing its own custom AI chips to gain more control over its infrastructure and reduce reliance on external suppliers.

- Does this shift reduce OpenAI’s reliance on Microsoft Azure?

Yes, the move to Google Cloud for some compute needs signals OpenAI’s effort to diversify its cloud infrastructure beyond Microsoft Azure, which has historically been its primary compute provider.

- Are other AI companies using Google’s TPUs?

Yes, Google has been expanding the external availability of its TPUs, attracting other high-profile clients like Apple and AI startups such as Anthropic (founded by former OpenAI employees).

- What is the significance of this collaboration, given that Google and OpenAI are rivals?

This partnership highlights how shared interests in infrastructure efficiency and cost management can lead to collaboration even among direct competitors in the AI sector.

- How are compute costs impacting the AI arms race?

Compute costs are a critical competitive factor in AI development, with computing projected to exceed 80% of OpenAI’s total expenses in 2025, making cost-efficient alternatives imperative.

- Did OpenAI or Google officially confirm this deal?

Neither Google nor OpenAI has issued official statements confirming the deal, though reports cite sources involved in the arrangement or familiar with the matter.

- What are the potential long-term implications for Microsoft Azure?

This partnership highlights Azure’s vulnerability if other AI firms follow OpenAI’s lead, opting for cheaper, specialized hardware from rivals.

- Is OpenAI also working on its own custom AI chip design?

Yes, OpenAI is reportedly finalizing the design for its first in-house AI chip, with plans for fabrication at TSMC, aiming for mass production in 2026.

- Why is diversification of chip suppliers important for AI companies?

Diversification helps companies reduce risks associated with supply chain bottlenecks, negotiate better terms, and gain more control over their hardware-software integration.

- How much did ChatGPT reportedly cost to train, and what are its daily operational costs?

ChatGPT reportedly cost over $100 million to train, and its daily operational costs continue to grow as usage expands.

- What does this development signal about the future of the AI infrastructure market?

It signals an evolving AI infrastructure market where flexibility, cost-efficiency, and compute availability are becoming more strategic, and specialized silicon tailored to specific AI needs is gaining prominence.